Forum Bot & Chat Bot with Azure OpenAI

Published:

GitHub Link: https://github.com/samuellee77/azure-openai-chat-forum-bot

Introduction

The Azure OpenAI Chat Forum Bot leverages the capabilities of OpenAI’s language model to create an interactive and engaging forum bot. With this bot, you can provide an enhanced user experience within your forum community by enabling dynamic and relevant discussions. This repository contains two parts, which are forum bot (on Discourse) and chat bot (on website).

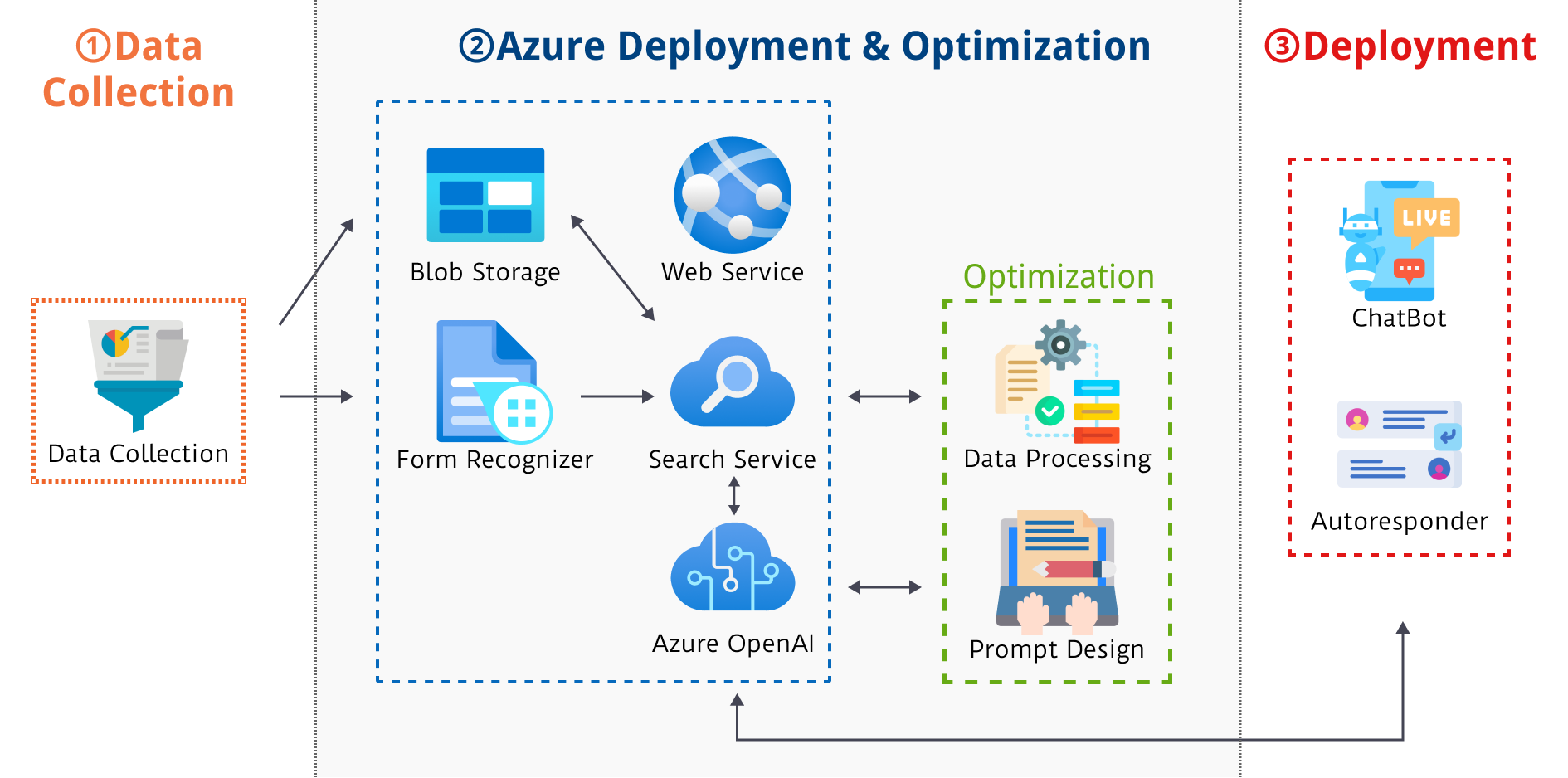

Enhanced Framework for Data Preparation and Application Deployment

This framework is designed to efficiently process raw data and deliver accurate, context-driven answers to user queries by leveraging Azure’s data services alongside OpenAI’s powerful language models. The framework is divided into two key phases: Data Preparation and Application Workflow.

Data Preparation

1. Data Collection

The objective of the data collection process is to gather all necessary content that can inform and answer potential user questions. In this phase, data is collected from a variety of sources, including documents, websites, logs, and any other repositories relevant to the domain. Collecting diverse and high-quality data at the outset is essential, as it lays the foundation for generating accurate and comprehensive responses in later stages of the application.

2. Text Splitting and Section Indexing

The objective of the text splitting and section indexing step is to break down large documents into smaller, manageable segments that can be easily searched and retrieved. This process involves dividing lengthy texts into coherent sections or paragraphs, where each segment represents a distinct idea or topic. Each segment is then tagged with metadata, such as keywords, section titles, or document IDs, to facilitate efficient indexing. Organizing the data in this way enhances search precision and significantly speeds up data processing during query execution.

3. Uploading to Azure Services

The objective of this step is to securely store and index the prepared data using Azure services, ensuring that it is both durable and easily accessible. The segmented data is first uploaded to Azure Blob Storage, which serves as a scalable and cost-effective repository. Following this, the data is indexed within the Azure Search Service, which enables rapid retrieval of relevant text segments based on user queries. This dual-upload strategy leverages the strengths of both Azure Blob Storage and Azure Search Service to optimize the data preparation process.

Application Workflow

1. User Query Submission

The objective of the user query submission step is to provide a user-friendly interface where users can easily enter their questions. When a user has a question, they interact with the application’s intuitive interface, which is designed to make the process of submitting queries as simple and straightforward as possible. This ensures that users can articulate their needs quickly and without confusion.

2. Backend Request Handling

The objective of the backend request handling step is to seamlessly transmit the user’s query from the front-end application to the backend server for further processing. Once the query is submitted, it is securely sent via an API call to the backend server. This process may include initial preprocessing steps, such as language normalization and tokenization, which help to clarify and structure the query before it is used to search the data repository.

3. Retrieval of Relevant Text Sources via Azure Search

The objective of this step is to identify and extract the most pertinent text segments that relate to the user’s query. The backend leverages Azure Search Service to perform a full-text search across the indexed data, using advanced search techniques such as ranking algorithms and semantic search features. This ensures that only the most relevant sections of text are retrieved, providing a solid foundation for generating a contextually accurate response.

4. Response Generation Using OpenAI Model

The objective of the response generation step is to create a comprehensive and context-aware answer by synthesizing the relevant text sources. In this stage, the retrieved text segments are sent to an OpenAI model, which processes the input, understands the context of the query, and generates a clear and informative answer. By leveraging the advanced natural language processing capabilities of the OpenAI model, the system is able to produce responses that are both accurate and contextually rich.

5. Delivery of the Answer

The objective of the delivery step is to present the generated response to the user in a clear and accessible format. After the answer is generated, it is returned to the front-end application, where it is promptly displayed to the user. This step completes the query-response cycle and may also provide additional context or links to the original sources, allowing users to explore the information further if desired.

Strengths

Data Privacy and Security

One of the primary strengths of utilizing Azure OpenAI is its strong commitment to data privacy and security. Azure OpenAI guarantees that all stored data remains secure and is not repurposed for training future models. This assurance means that any sensitive or proprietary information uploaded into the system is protected from unauthorized use, which is a crucial consideration for businesses dealing with confidential data. By ensuring that the data will not be used to further train models, organizations can confidently use the platform knowing that their information remains isolated and safe.

Cost-Efficient Custom LLM Chat Bot Development

A significant advantage of this solution is its cost efficiency in building a custom Large Language Model (LLM) chat bot. Leveraging Azure OpenAI provides a streamlined approach where businesses can deploy powerful chat bot solutions without the prohibitive costs associated with training a new model from scratch. Traditional fine-tuning or developing an entirely new model can be expensive due to the substantial computational resources and time required. In contrast, using pre-trained models that can be quickly adapted to specific needs drastically reduces both time-to-market and overall costs. This affordability makes advanced AI capabilities accessible to a broader range of organizations, from startups to large enterprises.

Automated Data Preparation Process

The framework also excels in automating the entire data preparation process, significantly simplifying what is typically a complex and time-consuming task. Users only need to download their data into the designated data folder. After placing the data in the correct location, running the /script/prepdocs.sh script initiates an automated workflow that handles all necessary preprocessing tasks. This script splits the data into manageable segments, indexes them correctly, and subsequently uploads the prepared content to the respective Azure services. The automation minimizes human intervention and the risk of errors, ensuring consistency and reliability throughout the data preparation process. This streamlined approach not only accelerates the deployment of the chat bot but also enhances the system’s overall efficiency and responsiveness.

Conclusion

The Azure OpenAI Chat Forum Bot integrates advanced AI with robust Azure data services to deliver secure, efficient, and cost-effective interactive experiences. By automating data collection, segmentation, indexing, and uploading, the system ensures rapid and accurate query responses while maintaining strict data privacy. This streamlined approach minimizes manual intervention, reduces costs, and enhances user engagement across forums and websites.